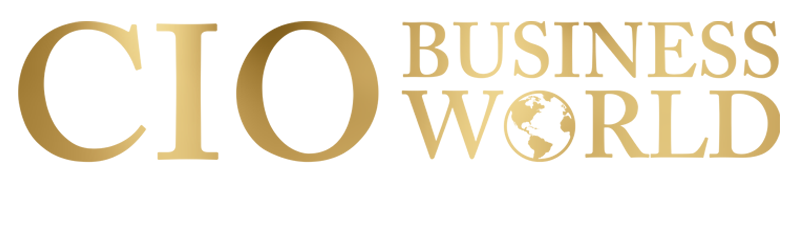

In a tech world where digital tools increasingly reach young people, Meta has taken an important step to prevent its AI chatbots from discussing suicide with teenagers. The policy change is intended to reduce the risk of chatbot conversations worsening sensitive mental health situations for impressionable young users.

Meta’s move comes amid growing concern over how AI-powered tools handle mental health issues. By limiting chatbot responses about suicide for young users, Meta hopes to direct vulnerable individuals to safer, more appropriate channels for assistance.

Meta’s Policy Shift on AI Conversations

Meta’s updated guidelines instruct its AI systems to refrain from engaging in suicide-related conversations when interacting with users who appear to be under 18. Instead, the chatbots will provide calming messages and direct teens to professional mental health services, such as hotlines or crisis text lines.

Under the new policy, if a conversation veers toward self-harm or suicidal thoughts, the AI chatbot will immediately change course. The system is designed to respond with empathy and support, without engaging in detailed discussions that might inadvertently cause harm.

This measure reflects a growing industry emphasis on responsible AI deployment, especially where young people’s mental health is concerned. Experts have long warned that unsupervised AI conversations on sensitive topics could cause emotional distress for minors.

Meta’s commitment also includes regular reviews of and adjustments to the chatbots’ behavior to ensure compliance and effectiveness. The company intends to use feedback from users and mental health specialists to improve the system over time. As AI technologies advance, so do the challenges of protecting mental health, particularly among adolescent users.

More Than Just a Safety Net

Although limiting chatbot conversations about suicide may sound restrictive, Meta emphasizes that the measure is not about censorship. Instead, the company presents it as a protective layer, one that steers away from potentially dangerous discussions in favor of redirecting teens to trusted, human-led support.

Meta’s team also stated that the policy will come with improvements to AI training so that the chatbots express empathy appropriately, avoid triggering language, and respond immediately to signs of crisis. The company is rolling out the changes incrementally and will monitor user interactions closely before full-scale implementation.

Meta’s Policy Revision

Meta’s policy revision is straightforward but powerful: when it comes to teen mental health, the company chooses caution and connection over risk. Meta is stopping its AI chatbots from engaging in in-depth discussions of suicide with minors and is guiding vulnerable users toward professional help instead.

